8 A/B Tests to Optimise Your Referral Marketing Metrics in 2025

Did you know A/B testing by cohort typically increases referral marketing performance by 4x within six months?

It might seem like the easy option, but an email blast to your entire customer base is a risky strategy. At best, it’ll resonate with a small proportion of your customers. At worst, it could alienate the majority. Nobody likes receiving offers for things they’ve already bought (especially if they could’ve bought them for less).

That’s where segmentation and A/B testing come in.

By strategically segmenting your customers and A/B testing every element of their journey, you can gather valuable insight into what best engages different customers, and when.

You could learn, for example, that first-time customers are more likely to return when offered a complimentary gift with their next purchase. Or that high-spending males are more likely to refer friends on their third visit.

Or that referred second-time female customers aged 25 to 30 who spend an average of £60 per order and give an NPS of 6 are most likely to engage with messaging about your new collection. That's how deeply you can understand your customers' behaviour with the right tools and strategy.

Read on for eight A/B tests to inspire your campaigns in 2025.

What Is A/B Testing in Marketing?

A/B testing, also known as split testing, is a marketing experiment where you compare two versions of a campaign element—such as an email subject line, referral incentive, or call-to-action (CTA)—to see which performs better. You divide your audience into two (or more) groups, show each group a different variation, and measure which version drives the desired outcome more effectively.
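One common way to divide an audience is to hash each customer ID, so the same customer always sees the same variation. The sketch below is illustrative only: the function name, the experiment label and the customer ID format are assumptions, not part of any particular referral platform.

```python
import hashlib

def assign_variant(customer_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a customer to one variant of an experiment.

    Hashing the experiment name together with the customer ID means the
    same customer always lands in the same group for a given test, while
    different tests split the audience independently of each other.
    """
    key = f"{experiment}:{customer_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical usage: split customers between two referral incentives.
for cid in ("cust-001", "cust-002", "cust-003"):
    print(cid, assign_variant(cid, "incentive-test"))
```

Because the assignment depends only on the ID and the experiment name, there is no need to store who saw what before the test starts, and adding a third variant is just a matter of extending the `variants` tuple.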

In referral marketing, A/B testing might involve testing different incentives (like £10 off vs. 20% off), messages (e.g. “Give £10, Get £10” vs. “Get £10 when your friend shops”), or share methods (email vs. WhatsApp). It’s a data-driven way to optimise performance based on real customer behaviour—not guesses.

When done correctly, A/B testing can give marketers a clear roadmap for what resonates with different customer segments, helping you fine-tune your campaigns for better engagement, higher conversion rates, and measurable ROI.

If you’re serious about iterating and improving results based on evidence, a referral marketing platform gives you the measurement framework to run A/B tests and see what truly impacts referral performance.

How A/B Testing Can Help Referral Marketers

Referral marketing naturally lends itself to iterative testing and optimisation. You're dealing with multiple customer touchpoints—introducing the programme, encouraging sharing, converting the referred friend, and re-engaging both parties afterward. That means more opportunities to experiment, learn, and refine.

Here are just a few ways A/B testing strengthens referral campaigns:

Pinpoints what motivates your various customer groups to refer: Whether it’s a reward, recognition, social impact, or exclusivity, testing helps uncover which motivation works best for each cohort.

Optimises referral CTA placement and language: Small changes to where and how you ask customers to refer can have a big impact.

Surfaces winning incentive structures: Understanding whether customers prefer “give and get” offers, loyalty points, free products, or tiered discounts helps you match rewards to audience preferences.

Boosts referral share and conversion rates: By continuously testing formats (e.g., image layout, share methods), you'll increase how often people refer and how many friends actually make a purchase.

Informs wider retention and acquisition strategy: Learnings from referral tests can also guide your broader lifecycle marketing—everything from onboarding flows to loyalty campaigns.

Put simply: referral marketing driven by testing and segmentation doesn’t just perform better—it becomes a powerful channel for learning what makes your customers tick.

Image tests

1. Background colour

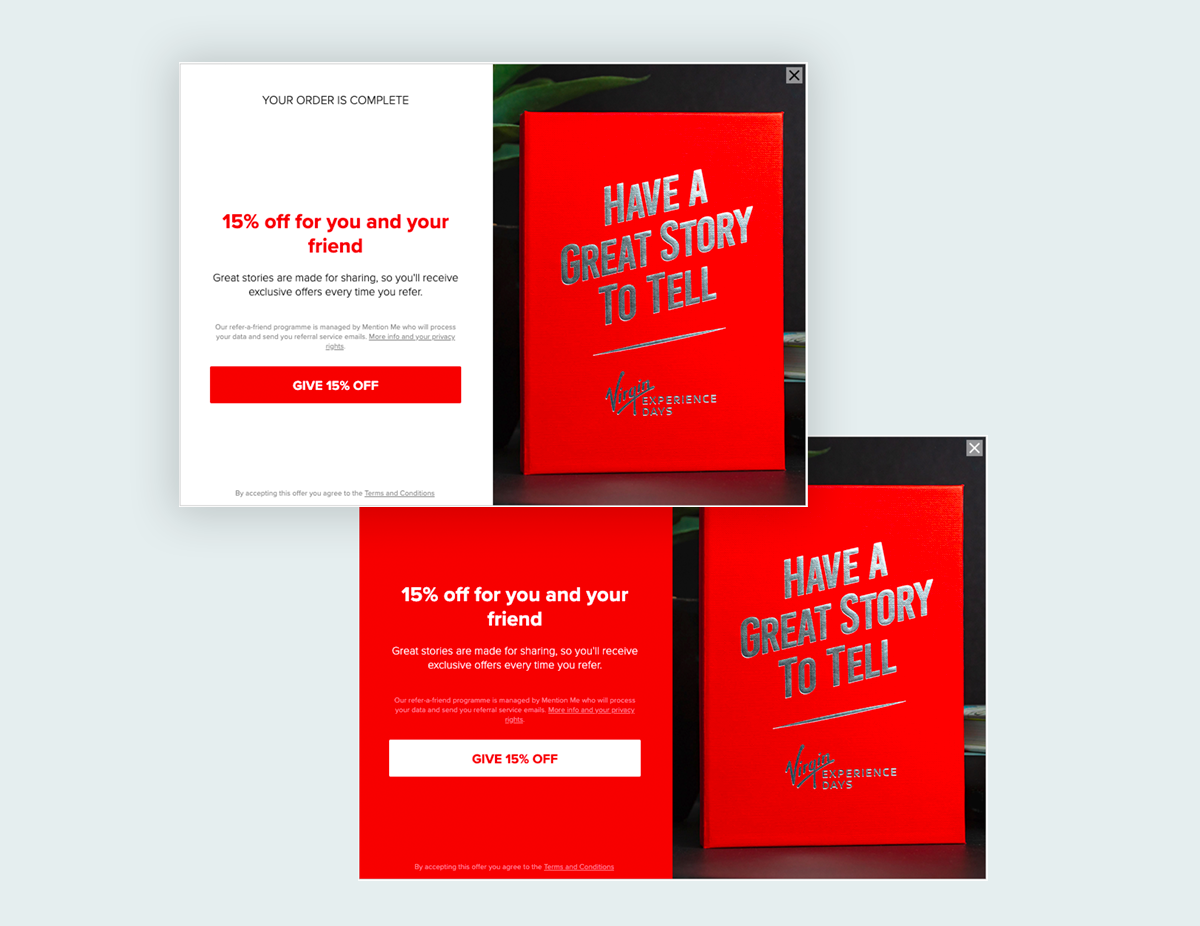

When you think of Virgin Experience Days, it's likely the brand's iconic red that springs to mind. That's the colour the experience provider used as its referral overlay background before deciding to experiment with changing it to white.

Much to its surprise, a white background increased the likelihood of people sharing Virgin Experience Days' referral offers and introducing their friends. It's now used as standard across its refer-a-friend campaigns.

Winner: white background

Incentive tests

2. Discount type

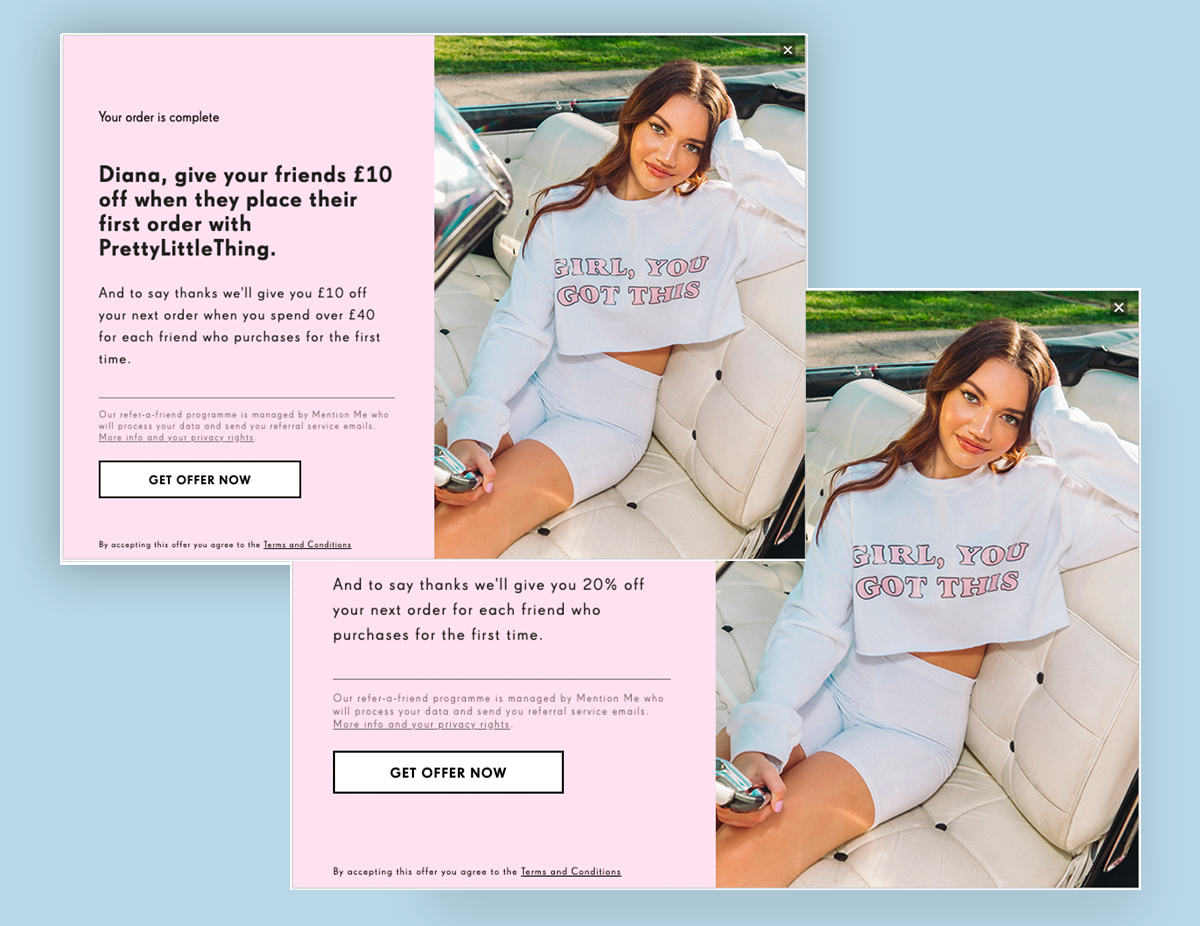

A common starting point for refer-a-friend A/B tests is percentage versus fixed-value discount. This is the route PrettyLittleThing took when it first launched referral. It offered £10 off (with a £40 minimum spend) versus 20% off to discover which incentive got people talking and introducing their friends to the fast fashion brand.

The results were interesting: while the fixed-sum offer was shared more, it converted fewer friends into new customers. New customer acquisition was a priority for PLT, so it declared 20% off the winner.

Winner: percentage discount

“Experimenting with A/B tests has given us valuable insight into our customers and promoting referral has been a great way to reiterate our brand’s message. We can’t wait to see what referral helps us to achieve next!” - Abbie Hodgson, Assistant CRM Manager, PrettyLittleThing

3. Discount level

The bigger the discount, the better the offer, right? Not according to No1 Lounges' customers.

The airport lounge provider experimented with a £10 versus £7.50 incentive for referrers and their friends. Much to its surprise, it discovered the lower discount recruited 29% more new customers and generated 42% more revenue.

Winner: £7.50 discount

Check out our No1 Lounges case study here

4. Discount based on customer spend

Full disclosure: this example isn’t strictly an A/B test. It is, however, a great example of how you can tailor messaging to customer behaviour.

FatFace wanted to increase the average order value (AOV) of low-spending customers, while also encouraging first-time customers to return quickly. It subsequently experimented with showing different engagement content to new customers based on their first order value.

It offered £10 off with a minimum spend of £50 to low-spending customers, and 15% off with no minimum spend to high-spenders.

Tempted by the chance to save £10, a significant proportion of low-spenders spent more than £50 on their second shop, driving up FatFace’s AOV. Better yet, the time limit on both offers meant customers repeat purchased more quickly, too.

Winner: £10 off with minimum spend

5. Gifts

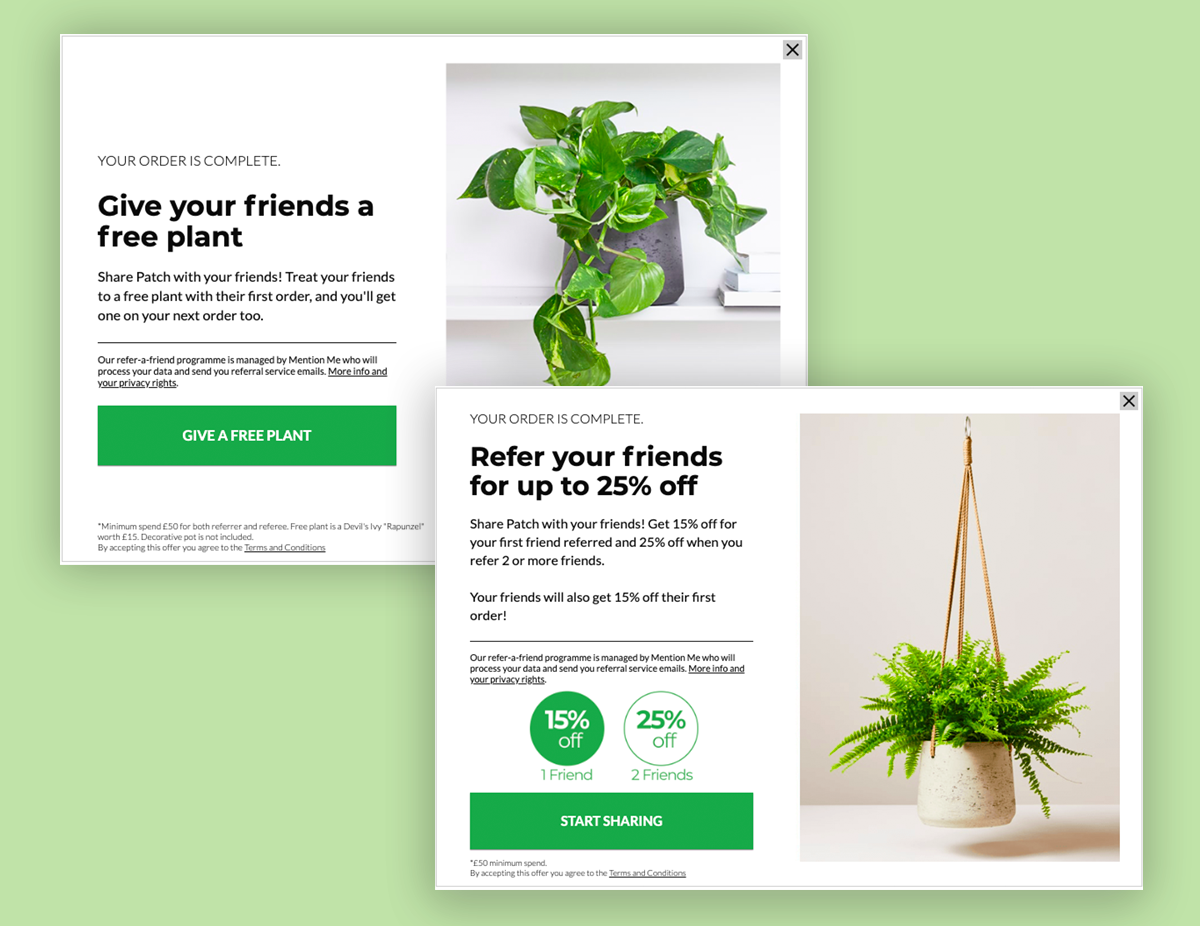

Discounts aren't the only way to get people talking. London-based plant company Patch experimented with tiered rewards versus a free plant as a referral incentive.

Half its customers were offered a discount based on how many friends they referred (15% off for one friend and 25% off for two or more), while the other half were offered a free plant for both them and their friend. Within two weeks, the gift proved significantly more effective, increasing Patch's referral share rate by 49%.

Winner: free gift

Copy tests

Incentives are an important element of a successful referral channel – but they're not the only area worth experimenting with. Sometimes, seemingly minor tweaks can make a big difference.

6. Give vs. Get

Referral rewards usually fall into two categories: give-only (where the customer’s friend gets a discount but the referrer does not) and give/get (where both parties benefit). But which model drives better results?

When one consumer brand tested these strategies head-to-head, they found some surprising results. Offering a “give-only” discount initially led to more referrals being sent—customers were happy to treat their friends. But those emails generated fewer actual conversions. On the other hand, the “give/get” structure—with identical values for both parties—increased overall conversion and long-term customer value by 31%.

Why? When customers have skin in the game, they're more likely to actively promote the brand—and their friends are more likely to act knowing their referral helps both of them.

The takeaway: what seems more generous on the surface isn’t always more effective. A/B testing “give” vs. “give/get” structures will show you what truly motivates your customers to spread the word and how to structure incentives for maximum impact.

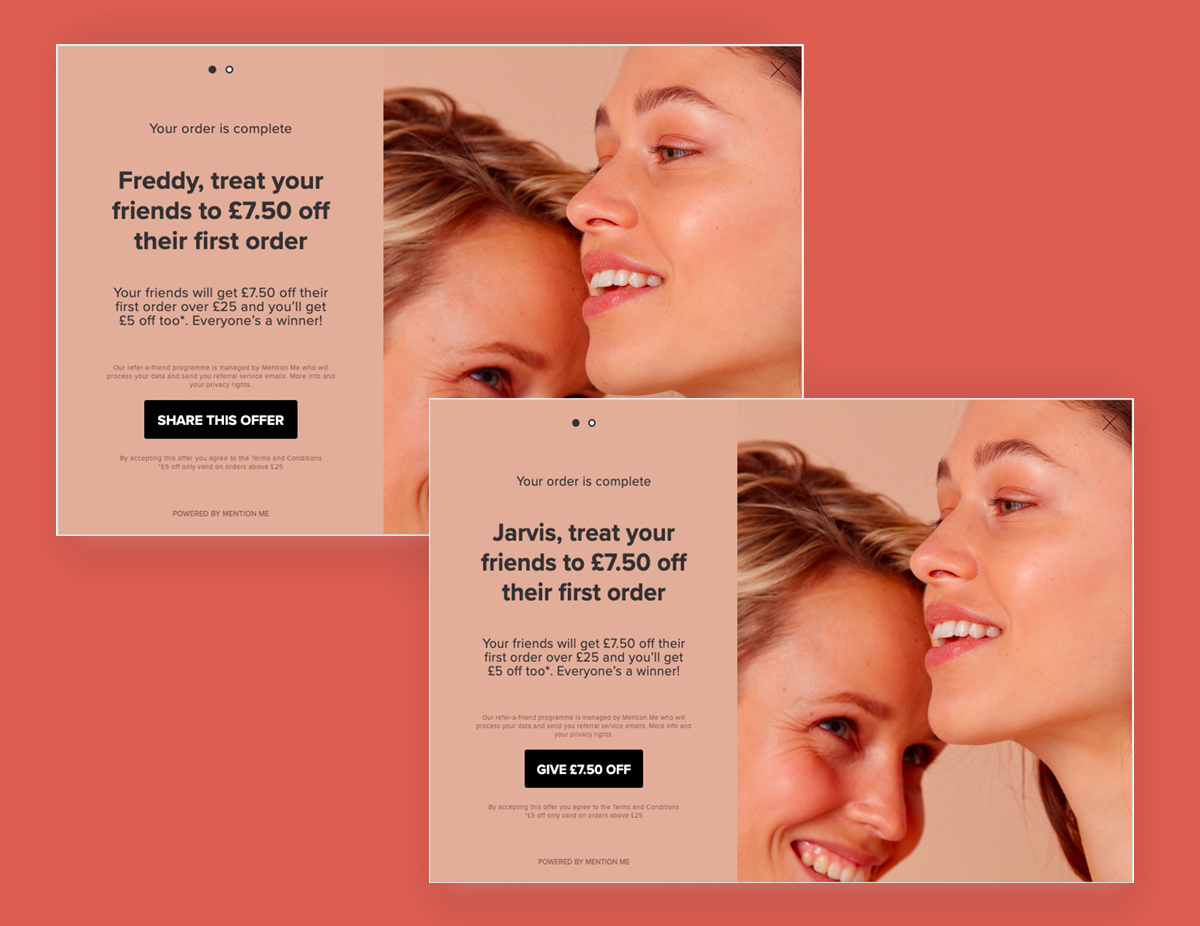

7. Call-to-action

Our eyes are naturally drawn to an offer's CTA, so it's important the copy there is engaging enough for customers to click it.

Paula's Choice experimented with 'Share this offer' versus 'Give 7.50 off' as its CTA copy. By emphasising why customers should refer the skincare brand, it increased the number of people sharing and converting friends into new customers.

Winner: reward CTA

Share Options

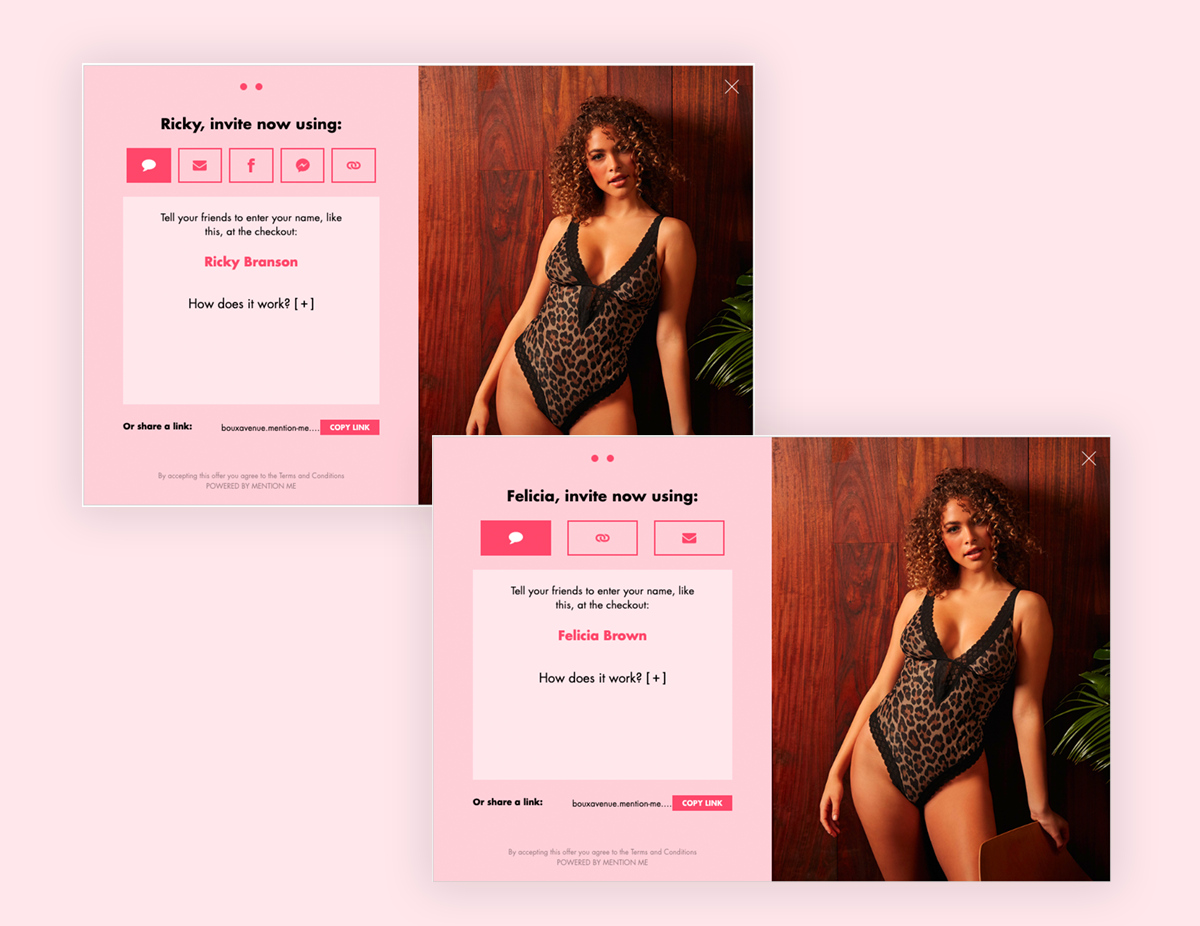

8. Number of share options

Boux Avenue originally offered five sharing options to referrers: name sharing, email, Facebook, WhatsApp and link sharing. It wanted to see if people engaged more with fewer options, so it ran an A/B test that removed Facebook for two weeks.

Interestingly, it found less isn't always more. The control group with all five sharing options delivered a higher share rate and more overall revenue for the lingerie brand.

Winner: 5 sharing options

Creating customer segments

For your A/B tests to be effective, you need enough customers to know the results are accurate. Four people clicking the red CTA versus five clicking the blue CTA doesn’t prove blue is more effective. 500 people clicking the red CTA versus 2,000 people clicking the blue, however, does (assuming each version was shown to a similar number of people). This is known as reaching statistical significance. Ending a test before it reaches statistical significance means your results may simply be a coincidence.
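To make the red-versus-blue comparison concrete, here is a minimal sketch of a two-proportion z-test, one standard way to check significance. The audience sizes (100 and 10,000 people per version) are assumptions added for illustration, since click counts alone aren't enough: you also need to know how many people saw each version.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates.

    conv_* is the number of people who clicked (or converted);
    n_* is the number of people who were shown that version.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Rates are identical at 0% or 100%; nothing to test.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Assumed audiences: 100 people per version in the small test,
# 10,000 people per version in the large one.
_, p_small = two_proportion_z_test(4, 100, 5, 100)
_, p_large = two_proportion_z_test(500, 10_000, 2_000, 10_000)
print(f"4 vs 5 clicks:       p = {p_small:.3f}")
print(f"500 vs 2,000 clicks: p = {p_large:.3g}")
```

A p-value below a threshold you choose in advance (0.05 is a common convention) suggests the difference is unlikely to be a coincidence. Under these assumed audience sizes, the four-versus-five result is nowhere near that threshold, while the 500-versus-2,000 result clears it comfortably.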

This is important to bear in mind when it comes to segmentation. The more you segment your customers, the more you’re splitting traffic and the longer it’ll take to reach statistical significance. This might not be such a problem if you have thousands of customers every month, but for smaller companies, it's something worth considering.
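A rough calculation shows how quickly segmentation stretches a test. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The baseline rate, the uplift you hope to detect, the weekly customer volume, and the 5% significance and 80% power settings are all illustrative assumptions, and it treats every segment as equal in size, which real segments rarely are.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline: float, relative_uplift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate customers needed in EACH variant to detect the uplift."""
    p1 = baseline
    p2 = baseline * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

def weeks_to_reach(n_per_variant: int, weekly_customers: int,
                   segments: int = 1, variants: int = 2) -> int:
    """Weeks needed when traffic is split evenly across segments and variants."""
    per_variant_per_week = weekly_customers / (segments * variants)
    return math.ceil(n_per_variant / per_variant_per_week)

# Assumed scenario: 5% baseline share rate, hoping to detect a 20% relative lift,
# with 2,000 customers entering the programme each week.
needed = sample_size_per_variant(baseline=0.05, relative_uplift=0.20)
for segments in (1, 2, 4):
    weeks = weeks_to_reach(needed, weekly_customers=2_000, segments=segments)
    print(f"{segments} segment(s): about {weeks} weeks")
```

Each additional segment divides the traffic available to every variant, so the waiting time grows roughly in proportion to the number of segments.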

So you can start running tests and optimising your programme quickly, we recommend starting with basic segmentation. Group your customers based on a factor that particularly interests you and aligns with your business priorities. This could be a generic trait like gender, age or geography, or something more specific like order frequency, average order value or order number. (We can advise on the best starting point for your segmentation strategy based on your objectives.)

Once you’ve segmented your customer base, it’s time to think about what you want to test. Again, we recommend starting with the basics before getting more sophisticated.

There are countless elements you can experiment with: content, messaging, copy, design, touchpoints, incentives, sharing methods... the list goes on. In fact, there are so many elements it can be hard to know where to start.

That's why we've written this blog post: to show how brands across sectors are experimenting with their campaigns, and how you can do the same to drive long-term revenue for your business.

We've tested thousands of referral programmes and retention campaigns on behalf of 400+ brands to increase core marketing metrics like new customer acquisition, repeat purchases and customer lifetime value. Sometimes, experiment results are as expected; other times, they're surprising. But always, they're valuable.

Measuring A/B Test Results

Running A/B tests is only valuable if you accurately measure the results. That means going beyond surface metrics like opens or clicks to understand what actually drives business outcomes—such as new customer acquisition, increased average order value (AOV), or improved customer lifetime value (CLV).

Here are four key tips for effectively measuring your A/B test results:

1. Define your success metric upfront

Before you launch any test, be clear on what “success” looks like. Are you trying to increase referrals sent? Improve the conversion rate of referred friends? Drive more revenue per customer? Your goal should directly align with one of your key business objectives—whether that’s growth, retention, or profit.

2. Always test one variable at a time

To ensure clarity, isolate your experiments to a single variable. For example, test incentive type first (e.g., 15% off vs. £10 off). Once you find a winner, move on to other variables like messaging or share method. Testing multiple elements at once (without the right framework) can lead to false assumptions and inconclusive results.

3. Wait until tests reach statistical significance

Jumping to conclusions based on small datasets can lead to bad decisions. Use proper testing methodology and statistical calculators (or platform tools) to determine when your test has gathered enough data to be reliable. If 10 people click a button and 8 prefer version A, that’s not a meaningful winner—500 vs. 2,000 is a different story.

4. Go beyond the first conversion

Success doesn’t end with a referred customer making a first purchase. Track how those customers behave post-conversion. Do they order again? Do they eventually refer others? These downstream effects are critical to understanding the long-term value of your A/B testing decisions.

Bonus tip: Consider segment results, not just overall outcomes

What works for one customer group doesn’t always translate to another. Break down your A/B test results by cohort—e.g., high-spenders vs. low-spenders, new vs. returning customers, mobile vs. desktop users—and look for insights you can use to build more targeted campaigns moving forward.
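As a minimal sketch of that breakdown, the snippet below groups results by cohort and variant before calculating conversion rates. The record layout (segment, variant, converted) and the sample rows are made up for illustration; in practice the data would come from your own reporting export.

```python
from collections import defaultdict

def conversion_by_segment(records):
    """Return {(segment, variant): conversion_rate} from raw test records.

    Each record is a (segment, variant, converted) tuple, where converted
    is True if the customer took the desired action.
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, exposures]
    for segment, variant, converted in records:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conversions / exposures
            for key, (conversions, exposures) in totals.items()}

# Made-up records purely to show the shape of the output.
records = [
    ("high-spender", "A", True), ("high-spender", "A", False),
    ("high-spender", "B", True), ("high-spender", "B", True),
    ("low-spender", "A", True), ("low-spender", "A", True),
    ("low-spender", "B", False), ("low-spender", "B", True),
]
for (segment, variant), rate in sorted(conversion_by_segment(records).items()):
    print(f"{segment:<13} variant {variant}: {rate:.0%}")
```

Bear in mind that each cohort needs enough customers in its own right: a winner within one segment should pass the same significance check as an overall winner before you act on it.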

Roundup

So there you have it: eight A/B tests for optimising your referral and retention programme.

As our examples show, every brand is different. There's only one way to find out what most effectively engages your customers: strategic segmentation and A/B testing.

By continuously testing elements throughout your customer journey, you can develop in-depth insight into what motivates different customers to take desired actions. Then you can use your learnings to create more advanced segments, serve more targeted content, and build high customer lifetime value that generates significant long-term revenue.

Before you start, though, define what you want to achieve from your experiments. Every brand has its own definition of success. Do you want to incentivise NPS promoters to refer friends? Drive more repeat purchases from first-time customers? Increase the AOV of low-spenders?

Identify your key business objectives, then plan a strategic A/B testing roadmap to match (we can help with that).

With 25+ segmentation options and thousands of combinations available with our platform, the potential to learn about your customers and optimise your programme is limitless.

Simon Dring
